Unpacking tuple-like textfile


Given a text file of lines of 3-tuples, with sentences separated by blank lines:

```
(0, 12, Tokenization)
(13, 15, is)
(16, 22, widely)
(23, 31, regarded)
(32, 34, as)
(35, 36, a)
(37, 43, solved)
(44, 51, problem)
(52, 55, due)
(56, 58, to)
(59, 62, the)
(63, 67, high)
(68, 76, accuracy)
(77, 81, that)
(82, 91, rulebased)
(92, 102, tokenizers)
(103, 110, achieve)
(110, 111, .)

(0, 3, But)
(4, 14, rule-based)
(15, 25, tokenizers)
(26, 29, are)
(30, 34, hard)
(35, 37, to)
(38, 46, maintain)
(47, 50, and)
(51, 56, their)
(57, 62, rules)
(63, 71, language)
(72, 80, specific)
(80, 81, .)

(0, 2, We)
(3, 7, show)
(8, 12, that)
(13, 17, high)
(18, 26, accuracy)
(27, 31, word)
(32, 35, and)
(36, 44, sentence)
(45, 57, segmentation)
(58, 61, can)
(62, 64, be)
(65, 73, achieved)
(74, 76, by)
(77, 82, using)
(83, 93, supervised)
(94, 102, sequence)
(103, 111, labeling)
(112, 114, on)
(115, 118, the)
(119, 128, character)
(129, 134, level)
(135, 143, combined)
(144, 148, with)
(149, 161, unsupervised)
(162, 169, feature)
(170, 178, learning)
(178, 179, .)

(0, 2, We)
(3, 12, evaluated)
(13, 16, our)
(17, 23, method)
(24, 26, on)
(27, 32, three)
(33, 42, languages)
(43, 46, and)
(47, 55, obtained)
(56, 61, error)
(62, 67, rates)
(68, 70, of)
(71, 75, 0.27)
(76, 77, ?)
(78, 79, ()
(79, 86, English)
(86, 87, ))
(87, 88, ,)
(89, 93, 0.35)
(94, 95, ?)
(96, 97, ()
(97, 102, Dutch)
(102, 103, ))
(104, 107, and)
(108, 112, 0.76)
(113, 114, ?)
(115, 116, ()
(116, 123, Italian)
(123, 124, ))
(125, 128, for)
(129, 132, our)
(133, 137, best)
(138, 144, models)
(144, 145, .)
```

The goal is to produce two different data structures:

- `sents_with_positions`: a list of lists of tuples, where each tuple corresponds to one line of the text file
- `sents_words`: a list of lists of strings made up of only the third element of each tuple from the text file
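Because `sents_words` is just the third field of every tuple, it can also be derived from `sents_with_positions` after parsing instead of being built alongside it. A minimal sketch, assuming `sents_with_positions` already holds `(start, end, word)` tuples (the small two-sentence sample is made up from the first tokens of the input):

```python
# Hypothetical, already-parsed input: one list of (start, end, word) per sentence.
sents_with_positions = [
    [(0, 12, 'Tokenization'), (13, 15, 'is'), (16, 22, 'widely')],
    [(0, 3, 'But'), (4, 14, 'rule-based')],
]

# Keep only the third element of each tuple, sentence by sentence.
sents_words = [[word for _start, _end, word in sent] for sent in sents_with_positions]

print(sents_words)
# [['Tokenization', 'is', 'widely'], ['But', 'rule-based']]
```

Unpacking the tuple in the comprehension reads a little more clearly than `zip(*sent)`, and it also works on an empty sentence, where `list(zip(*[]))[2]` would raise an `IndexError`.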

For example, from the input text file above, the expected output is:

```python
sents_words = [
    ('Tokenization', 'is', 'widely', 'regarded', 'as', 'a', 'solved', 'problem', 'due', 'to', 'the', 'high', 'accuracy', 'that', 'rulebased', 'tokenizers', 'achieve', '.'),
    ('But', 'rule-based', 'tokenizers', 'are', 'hard', 'to', 'maintain', 'and', 'their', 'rules', 'language', 'specific', '.'),
    ('We', 'show', 'that', 'high', 'accuracy', 'word', 'and', 'sentence', 'segmentation', 'can', 'be', 'achieved', 'by', 'using', 'supervised', 'sequence', 'labeling', 'on', 'the', 'character', 'level', 'combined', 'with', 'unsupervised', 'feature', 'learning', '.')
]

sents_with_positions = [
    [(0, 12, 'Tokenization'), (13, 15, 'is'), (16, 22, 'widely'), (23, 31, 'regarded'), (32, 34, 'as'), (35, 36, 'a'), (37, 43, 'solved'), (44, 51, 'problem'), (52, 55, 'due'), (56, 58, 'to'), (59, 62, 'the'), (63, 67, 'high'), (68, 76, 'accuracy'), (77, 81, 'that'), (82, 91, 'rulebased'), (92, 102, 'tokenizers'), (103, 110, 'achieve'), (110, 111, '.')],
    [(0, 3, 'But'), (4, 14, 'rule-based'), (15, 25, 'tokenizers'), (26, 29, 'are'), (30, 34, 'hard'), (35, 37, 'to'), (38, 46, 'maintain'), (47, 50, 'and'), (51, 56, 'their'), (57, 62, 'rules'), (63, 71, 'language'), (72, 80, 'specific'), (80, 81, '.')],
    [(0, 2, 'We'), (3, 7, 'show'), (8, 12, 'that'), (13, 17, 'high'), (18, 26, 'accuracy'), (27, 31, 'word'), (32, 35, 'and'), (36, 44, 'sentence'), (45, 57, 'segmentation'), (58, 61, 'can'), (62, 64, 'be'), (65, 73, 'achieved'), (74, 76, 'by'), (77, 82, 'using'), (83, 93, 'supervised'), (94, 102, 'sequence'), (103, 111, 'labeling'), (112, 114, 'on'), (115, 118, 'the'), (119, 128, 'character'), (129, 134, 'level'), (135, 143, 'combined'), (144, 148, 'with'), (149, 161, 'unsupervised'), (162, 169, 'feature'), (170, 178, 'learning'), (178, 179, '.')]
]
```

I have been doing it by:

- iterating through each line of the text file, processing the tuple, and appending it to a list to build `sents_with_positions`;
- while appending each processed sentence to `sents_with_positions`, also appending the last element of each of its tuples to `sents_words`.

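Code:

```python
sents_with_positions = []
sents_words = []
_sent = []
for line in _input.split('\n'):
    if len(line.strip()) > 0:
        line = line[1:-1]                      # drop the outer parentheses
        start, _, rest = line.partition(',')   # split off the start index
        end, _, rest = rest.partition(',')     # split off the end index
        text = rest.strip()                    # whatever is left is the token
        _sent.append((int(start), int(end), text))
    elif _sent:  # a blank line closes the current sentence
        sents_with_positions.append(_sent)
        sents_words.append(list(zip(*_sent))[2])
        _sent = []
if _sent:  # flush the last sentence if the input doesn't end with a blank line
    sents_with_positions.append(_sent)
    sents_words.append(list(zip(*_sent))[2])
```

But is there a simpler or cleaner way to achieve the same output? Maybe with regexes, or some itertools trick?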

Note that some lines of the text file contain tricky tuples, e.g.:

```
(86, 87, ))        # Sometimes the token/word is a bracket
(96, 97, ()
(87, 88, ,)        # Sometimes the token/word is a comma
(29, 33, Café)     # The token/word is Unicode (sometimes accented), so [a-zA-Z] might be insufficient
(2, 3, 2)          # Sometimes the token/word is a number
(47, 52, 3,000)    # Sometimes the token/word is a number/word with a comma
(23, 29, (e.g.))   # Sometimes the token/word contains brackets
```
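All of these cases share one property: the first two fields are integers, and the token is everything between the second separator and the last `)` on the line. A minimal sketch of a line regex built on that observation; it assumes exactly one space after each separator comma, as in the sample, and `parse_line` is a hypothetical helper name:

```python
import re

# Two integer fields, then everything up to the LAST ')' on the line.
# The greedy (.*) plus the $ anchor lets the token itself contain ')' or ','.
LINE_RE = re.compile(r'^\((\d+), (\d+), (.*)\)$')

def parse_line(line):
    """Return (start, end, token) for one tuple-like line, or None if it doesn't match."""
    m = LINE_RE.match(line.rstrip('\n'))
    if m is None:
        return None
    start, end, token = m.groups()
    return int(start), int(end), token

tricky = ['(86, 87, ))', '(96, 97, ()', '(87, 88, ,)', '(29, 33, Café)',
          '(2, 3, 2)', '(47, 52, 3,000)', '(23, 29, (e.g.))']
for line in tricky:
    print(parse_line(line))
```

Because `(.*)` is greedy, the regex engine backtracks only as far as the final `)`, which is why `))` yields the token `)` and `(e.g.))` yields `(e.g.)`.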

Answer by Kasramvd for Unpacking tuple-like textfile

You can use a regex and a `deque`, which is more optimized when you are dealing with huge files:

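```python
import re
from collections import deque

pattern = re.compile(r'^\((\d+),\s?(\d+),\s?(.*)\)$')
sents_with_positions = deque()
container = deque()
with open('myfile.txt') as f:
    for line in f:
        line = line.rstrip('\n')  # the last line may have no trailing newline
        if line:
            match = pattern.search(line)
            if match:  # silently skip lines that don't look like tuples
                container.append(match.groups())
        else:
            sents_with_positions.append(container)
            # Bind a fresh deque: calling container.clear() here would also
            # empty the deque that was just appended.
            container = deque()
if container:  # the last sentence may not be followed by a blank line
    sents_with_positions.append(container)
```

Answer by unutbu for Unpacking tuple-like textfile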

Parsing text files in chunks separated by some delimiter is a common problem. It helps to have a utility function, such as open_chunk below, which can "chunkify" text files given a regex delimiter. The open_chunk function yields chunks one at a time, without reading the whole file at once, so it can be used on files of any size. Once you've identified the chunks, processing each chunk is relatively easy:

```python
import re

def open_chunk(readfunc, delimiter, chunksize=1024):
    """
    readfunc(chunksize) should return a string.
    http://stackoverflow.com/a/17508761/190597 (unutbu)
    """
    remainder = ''
    for chunk in iter(lambda: readfunc(chunksize), ''):
        pieces = re.split(delimiter, remainder + chunk)
        for piece in pieces[:-1]:
            yield piece
        remainder = pieces[-1]
    if remainder:
        yield remainder

sents_with_positions = []
sents_words = []
with open('data') as infile:
    for chunk in open_chunk(infile.read, r'\n\n'):
        row = []
        words = []
        # Taken from LeartS's answer: http://stackoverflow.com/a/34416814/190597
        for start, end, word in re.findall(
                r'\((\d+),\s*(\d+),\s*(.*)\)', chunk, re.MULTILINE):
            start, end = int(start), int(end)
            row.append((start, end, word))
            words.append(word)
        sents_with_positions.append(row)
        sents_words.append(words)

print(sents_words)
print(sents_with_positions)
```

Running this yields output that includes:

```
(86, 87, ')'), (87, 88, ','), (96, 97, '(')
```

Answer by LeartS for Unpacking tuple-like textfile

This is, in my opinion, a little more readable and clear, but it may be a little less performant, and it assumes the input file is correctly formatted (e.g. empty lines are really empty, whereas your code works even if there is some stray whitespace in the "empty" lines). It leverages regex groups: they do all the work of parsing the lines, and we just convert start and end to integers.

```python
import re

# Raw string, so '\(' and '\d' reach the regex engine unchanged
line_regex = re.compile(r'^\((\d+), (\d+), (.+)\)$', re.MULTILINE)
sents_with_positions = []
sents_words = []
for section in _input.split('\n\n'):
    words_with_positions = [
        (int(start), int(end), text)
        for start, end, text in line_regex.findall(section)
    ]
    words = tuple(t[2] for t in words_with_positions)
    sents_with_positions.append(words_with_positions)
    sents_words.append(words)
```

Answer by Padraic Cunningham for Unpacking tuple-like textfile

If you are using Python 3 and you don't mind `(87, 88, ,)` becoming `('87', '88', '')`, you can use `csv.reader` to parse the values after removing the outer parentheses by slicing:

```python
from itertools import groupby
from csv import reader

def yield_secs(fle):
    with open(fle) as f:
        # Group consecutive lines into runs of non-blank / blank lines
        for k, v in groupby(map(str.rstrip, f), key=lambda x: x.strip() != ""):
            if k:
                tmp1, tmp2 = [], []
                for t in v:
                    a, b, c, *_ = next(reader([t[1:-1]], skipinitialspace=True))
                    tmp1.append((a, b, c))
                    tmp2.append(c)
                yield tmp1, tmp2

for sec in yield_secs("test.txt"):
    print(sec)
```

You can fix that with `if not c: c = ","`, as the only way `c` can be an empty string is if the token is a `,`, so you will get `('87', '88', ',')`.
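To see what `csv.reader` does with the tricky tokens from the question, here is the per-line parsing step on its own, including the empty-field fix (`parse_with_csv` is a hypothetical helper name). It also exposes a limitation worth knowing: a token such as `3,000` is split by the reader, so only `'3'` survives as the third field.

```python
from csv import reader

def parse_with_csv(line):
    """Parse one '(start, end, token)' line as in the answer above: strip the
    outer parentheses, then let csv.reader split the rest on commas."""
    a, b, c, *_ = next(reader([line[1:-1]], skipinitialspace=True))
    if not c:  # an empty third field can only come from a lone ',' token
        c = ','
    return a, b, c

print(parse_with_csv('(0, 12, Tokenization)'))  # ('0', '12', 'Tokenization')
print(parse_with_csv('(87, 88, ,)'))            # ('87', '88', ',')
print(parse_with_csv('(86, 87, ))'))            # ('86', '87', ')')
print(parse_with_csv('(47, 52, 3,000)'))        # ('47', '52', '3'): the ',000' part is lost
```

If tokens like `3,000` can occur, a parser that only splits on the first two separators avoids this.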

For Python 2, which has no extended unpacking (`a, b, c, *_ = ...`), you just need to slice the first three elements to avoid an unpacking error:

```python
from csv import reader
from itertools import groupby, imap

def yield_secs(fle):
    with open(fle) as f:
        for k, v in groupby(imap(str.rstrip, f), key=lambda x: x.strip() != ""):
            if k:
                tmp1, tmp2 = [], []
                for t in v:
                    t = next(reader([t[1:-1]], skipinitialspace=True))
                    tmp1.append(tuple(t[:3]))
                    tmp2.append(t[2])  # the token is the third field
                yield tmp1, tmp2
```

If you want all the data at once:

```python
from csv import reader
from itertools import groupby

def yield_secs(fle):
    with open(fle) as f:
        sent_word, sent_with_position = [], []
        for k, v in groupby(map(str.rstrip, f), key=lambda x: x.strip() != ""):
            if k:
                tmp1, tmp2 = [], []
                for t in v:
                    a, b, c, *_ = next(reader([t[1:-1]], skipinitialspace=True))
                    tmp1.append((a, b, c))
                    tmp2.append(c)
                sent_word.append(tmp2)
                sent_with_position.append(tmp1)
        return sent_word, sent_with_position

sent_words, sent_with_positions = yield_secs("test.txt")
```

You can actually do it by just splitting, too, and keep any comma inside the token: `t[1:-1].split(", ", 2)` splits only on the first two separators, so a token such as `,` or `3,000` is left intact:

```python
from itertools import groupby
from pprint import pprint

def yield_secs(fle):
    with open(fle) as f:
        sent_word, sent_with_position = [], []
        for k, v in groupby(map(str.rstrip, f), key=lambda x: x.strip() != ""):
            if k:
                tmp1, tmp2 = [], []
                for t in v:
                    a, b, c = t[1:-1].split(", ", 2)  # at most two splits
                    tmp1.append((a, b, c))
                    tmp2.append(c)
                sent_word.append(tmp2)
                sent_with_position.append(tmp1)
        return sent_word, sent_with_position

snt, snt_pos = yield_secs("test.txt")
pprint(snt)
pprint(snt_pos)
```

Which will give you:

```
[['Tokenization', 'is', 'widely', 'regarded', 'as', 'a', 'solved', 'problem', 'due', 'to', 'the', 'high', 'accuracy', 'that', 'rulebased', 'tokenizers', 'achieve', '.'],
 ['But', 'rule-based', 'tokenizers', 'are', 'hard', 'to', 'maintain', 'and', 'their', 'rules', 'language', 'specific', '.'],
 ['We', 'show', 'that', 'high', 'accuracy', 'word', 'and', 'sentence', 'segmentation', 'can', 'be', 'achieved', 'by', 'using', 'supervised', 'sequence', 'labeling', 'on', 'the', 'character', 'level', 'combined', 'with', 'unsupervised', 'feature', 'learning', '.'],
 ['We', 'evaluated', 'our', 'method', 'on', 'three', 'languages', 'and', 'obtained', 'error', 'rates', 'of', '0.27', '?', '(', 'English', ')', ',', '0.35', '?', '(', 'Dutch', ')', 'and', '0.76', '?', '(', 'Italian', ')', 'for', 'our', 'best', 'models', '.']]
[[('0', '12', 'Tokenization'), ('13', '15', 'is'), ('16', '22', 'widely'), ('23', '31', 'regarded'), ('32', '34', 'as'), ('35', '36', 'a'), ('37', '43', 'solved'), ('44', '51', 'problem'), ('52', '55', 'due'), ('56', '58', 'to'), ('59', '62', 'the'), ('63', '67', 'high'), ('68', '76', 'accuracy'), ('77', '81', 'that'), ('82', '91', 'rulebased'), ('92', '102', 'tokenizers'), ('103', '110', 'achieve'), ('110', '111', '.')],
 [('0', '3', 'But'), ('4', '14', 'rule-based'), ('15', '25', 'tokenizers'), ('26', '29', 'are'), ('30', '34', 'hard'), ('35', '37', 'to'), ('38', '46', 'maintain'), ('47', '50', 'and'), ('51', '56', 'their'), ('57', '62', 'rules'), ('63', '71', 'language'), ('72', '80', 'specific'), ('80', '81', '.')],
 [('0', '2', 'We'), ('3', '7', 'show'), ('8', '12', 'that'), ('13', '17', 'high'), ('18', '26', 'accuracy'), ('27', '31', 'word'), ('32', '35', 'and'), ('36', '44', 'sentence'), ('45', '57', 'segmentation'), ('58', '61', 'can'), ('62', '64', 'be'), ('65', '73', 'achieved'), ('74', '76', 'by'), ('77', '82', 'using'), ('83', '93', 'supervised'), ('94', '102', 'sequence'), ('103', '111', 'labeling'), ('112', '114', 'on'), ('115', '118', 'the'), ('119', '128', 'character'), ('129', '134', 'level'), ('135', '143', 'combined'), ('144', '148', 'with'), ('149', '161', 'unsupervised'), ('162', '169', 'feature'), ('170', '178', 'learning'), ('178', '179', '.')],
 [('0', '2', 'We'), ('3', '12', 'evaluated'), ('13', '16', 'our'), ('17', '23', 'method'), ('24', '26', 'on'), ('27', '32', 'three'), ('33', '42', 'languages'), ('43', '46', 'and'), ('47', '55', 'obtained'), ('56', '61', 'error'), ('62', '67', 'rates'), ('68', '70', 'of'), ('71', '75', '0.27'), ('76', '77', '?'), ('78', '79', '('), ('79', '86', 'English'), ('86', '87', ')'), ('87', '88', ','), ('89', '93', '0.35'), ('94', '95', '?'), ('96', '97', '('), ('97', '102', 'Dutch'), ('102', '103', ')'), ('104', '107', 'and'), ('108', '112', '0.76'), ('113', '114', '?'), ('115', '116', '('), ('116', '123', 'Italian'), ('123', '124', ')'), ('125', '128', 'for'), ('129', '132', 'our'), ('133', '137', 'best'), ('138', '144', 'models'), ('144', '145', '.')]]
```

Answer by GsusRecovery for Unpacking tuple-like textfile

I've read many good answers, some of them using approaches close to the one I came up with when I read the question. Still, I think mine adds something to the subject, so I've decided to post it.

Abstract

My solution parses the input one line at a time, so it can handle files that won't easily fit in memory.

Each line is decoded by a Unicode-aware regex. It matches both data lines and empty lines, so it knows when the current section ends. This makes the parsing OS-agnostic regardless of the line separator (`\n`, `\r`, `\r\n`).
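In Python 3, part of that OS-independence comes from opening the file in text mode: with the default `newline=None`, universal-newlines mode turns `\r\n` and `\r` into `\n` before the regex ever sees a line. A small sketch that checks this with a temporary file (the sample lines are made up):

```python
import os
import tempfile

# Made-up sample: two sentences separated by an empty line.
body = ['(0, 2, We)', '', '(0, 3, But)']
results = {}
for name, sep in {'LF': '\n', 'CRLF': '\r\n', 'CR': '\r'}.items():
    # newline='' writes the separators exactly as given, with no translation.
    with tempfile.NamedTemporaryFile('w', delete=False, newline='') as tmp:
        tmp.write(sep.join(body) + sep)
        path = tmp.name
    try:
        # Default text mode (newline=None): '\r\n' and '\r' are read back as '\n'.
        with open(path) as f:
            results[name] = list(f)
    finally:
        os.remove(path)

for name, lines in results.items():
    print(name, lines)
# every separator gives ['(0, 2, We)\n', '\n', '(0, 3, But)\n']
```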

Just to be safe (when handling big files you never know), I've also made it tolerant of extra spaces or tabs in the input data.

For example, lines such as `( 0 , 4, rck )` or `( 86, 87 , ))` are both parsed correctly (see the regex breakdown section below and the output of the online demo).
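A quick way to check that tolerance claim in Python 3, where the `ur''` prefix is a syntax error, so the pattern is written here as a plain raw string (the line layout is my own and is ignored under `re.VERBOSE`):

```python
import re

# Same pattern as in this answer. Under re.VERBOSE the line breaks and the spaces
# between tokens are ignored, but the space inside each [ \t] class is kept.
pattern = re.compile(r'''
    ^ (?: [ \t]*[(][ \t]* (\d+) [ \t]*,[ \t]* (\d+)
          [ \t]*,[ \t]* (\S+) [ \t]*[)][ \t]* )? $
''', re.UNICODE | re.VERBOSE)

samples = ['( 0 , 4, rck )\n', '( 86, 87 , ))\n', '\n', ' \t\n']
for line in samples:
    print(repr(line), pattern.findall(line))
# '( 0 , 4, rck )\n' [('0', '4', 'rck')]
# '( 86, 87 , ))\n' [('86', '87', ')')]
# '\n' [('', '', '')]
# ' \t\n' []
```

The last sample shows a caveat: a line holding only spaces or tabs produces no match at all, so the parsing loop skips it instead of treating it as a section break.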

Code snippet (Ideone demo)

```python
import re

words = []
positions = []
# Python 3 raw string (the original ur'' prefix is Python 2 only)
pattern = re.compile(r'^ (?: [ \t]*[(][ \t]* (\d+) [ \t]*,[ \t]* (\d+) [ \t]*,[ \t]* (\S+) [ \t]*[)][ \t]* )? $', re.UNICODE | re.VERBOSE)
w_buffer = []
p_buffer = []
# automatically close the file handler, also in case of exception
with open('file.input') as fin:
    for line in fin:
        for (start, end, token) in pattern.findall(line):
            if start:
                w_buffer.append(token)
                p_buffer.append((int(start), int(end), token))
            else:  # empty line: close the current section
                words.append(tuple(w_buffer))
                w_buffer = []
                positions.append(p_buffer)
                p_buffer = []
# flush the last section if the file doesn't end with an empty line
if w_buffer:
    words.append(tuple(w_buffer))
    positions.append(p_buffer)

# An optional prettified output
import pprint as pp
pp.pprint(words)
pp.pprint(positions)
```

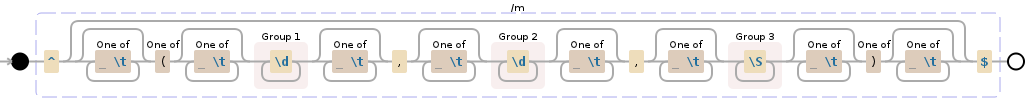

Regex breakdown (Regex101 demo)

```
^                    # Start of the string
(?:                  # Start NCG1 (Non-Capturing Group 1)
  [ \t]* [(] [ \t]*  # (1): A literal opening round bracket (I prefer it over '\(')...
                     # ...surrounded by zero or more spaces or tabs
  (\d+)              # One or more digits ([0-9]+) saved in CG1 (Capturing Group 1)
  [ \t]* , [ \t]*    # (2) A literal comma ','...
                     # ...surrounded by zero or more spaces or tabs
  (\d+)              # One or more digits ([0-9]+) saved in CG2
  [ \t]* , [ \t]*    # see (2)
  (\S+)              # One or more of any non-whitespace character...
                     # ...(as [^\s]) saved in CG3
  [ \t]* [)] [ \t]*  # see (1)
)?                   # Close NCG1, '?' makes the group optional...
                     # ...to also match empty lines (as '^$')
$                    # End of the string (with or without newline)
```

Answer by buckley for Unpacking tuple-like textfile

I found this a good challenge to do in a single replace regex.

I got the first part of your question working, leaving out some edge cases and stripping away non-essential details.

Below is a screenshot of how far I got using the excellent RegexBuddy tool.

Do you want a pure regex solution like this one, or are you looking for solutions that use code to process intermediate regex results?

If you are looking for a pure regex solution, I don't mind spending more time on it to cater for the details.

Answer by Nizam Mohamed for Unpacking tuple-like textfile

Each line of the text looks like a tuple. If the last component of each tuple were quoted, the lines could be `eval`'d. That's exactly what I've done: quote the last component.

```python
def quote_last(line):
    comma = ','
    # index just past the second comma: everything after it is the token
    second_comma = line.index(comma, line.index(comma) + 1) + 1
    rest, last = line[:second_comma], line[second_comma:]
    # escape backslashes first, then double quotes, so the token survives eval
    last = last.replace('\\', '\\\\').replace('"', '\\"')
    return '{} "{}")'.format(rest, last.strip()[:-1])

def get_tuples_and_strings(lines):
    positions, words, tuples = [], [], []
    for line in lines:
        line = line.strip()
        if line:
            line = quote_last(line)
            t = eval(line)  # the line is now a valid tuple literal
            tuples.append(t)
        elif tuples:  # a blank line closes the current sentence
            positions.append(tuples)
            words.append([t[-1] for t in tuples])
            tuples = []
    if tuples:  # last sentence, if the input doesn't end with a blank line
        positions.append(tuples)
        words.append([t[-1] for t in tuples])
    return positions, words

# `lines` can be any iterable of text lines, e.g. an open file object
sents_with_positions, sents_words = get_tuples_and_strings(lines)
```
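`eval` runs whatever ends up on the line, so with untrusted input a safer variant of the same quote-then-evaluate idea is to let `repr()` do the quoting and `ast.literal_eval` do the evaluating. A minimal sketch; `parse_line` is a hypothetical helper name, and it assumes every non-blank line has the `(start, end, token)` shape:

```python
import ast

def parse_line(line):
    """Hypothetical helper: turn '(start, end, token)' into a real tuple by quoting
    the token with repr() and evaluating the result with ast.literal_eval."""
    line = line.strip()
    # Index just past the second comma; everything after it is ' token)'.
    second_comma = line.index(',', line.index(',') + 1) + 1
    head, last = line[:second_comma], line[second_comma:]
    token = last.strip()[:-1]  # drop the closing ')'
    # repr() yields a valid string literal even for tokens containing quotes or
    # backslashes, and literal_eval accepts only literals, never arbitrary code.
    return ast.literal_eval('{} {!r})'.format(head, token))

for line in ['(0, 12, Tokenization)', '(87, 88, ,)', '(23, 29, (e.g.))',
             '(47, 52, 3,000)', '(5, 6, ")', '(7, 8, \\)']:
    print(parse_line(line))
```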
